21: App Development Lifecycle Series - Develop and Test

Published February 8, 2019 | Run time: 00:18:56

In Part 3 of our App Development Lifecycle Series, Tim breaks down the Development and Testing Phases. We share how we work with our clients to develop custom mobile app systems, as well as how we test those systems to make sure they work the way you (and your customers) expect.

In this episode, you will learn:

- How our team gets started with developing a project

- The develop/test cycle

- How to give productive feedback when testing a mobile app

This episode is brought to you by The Jed Mahonis Group, who builds mobile software solutions for the on-demand economy. Learn more at https://jmg.mn.

Recorded January 30, 2019 | Edited by Jordan Daoust

Episode Transcript:

Welcome to Constant Variables, a podcast where we take a non-technical look at mobile app development. I'm Tim Bornholdt. Let's get nerdy.

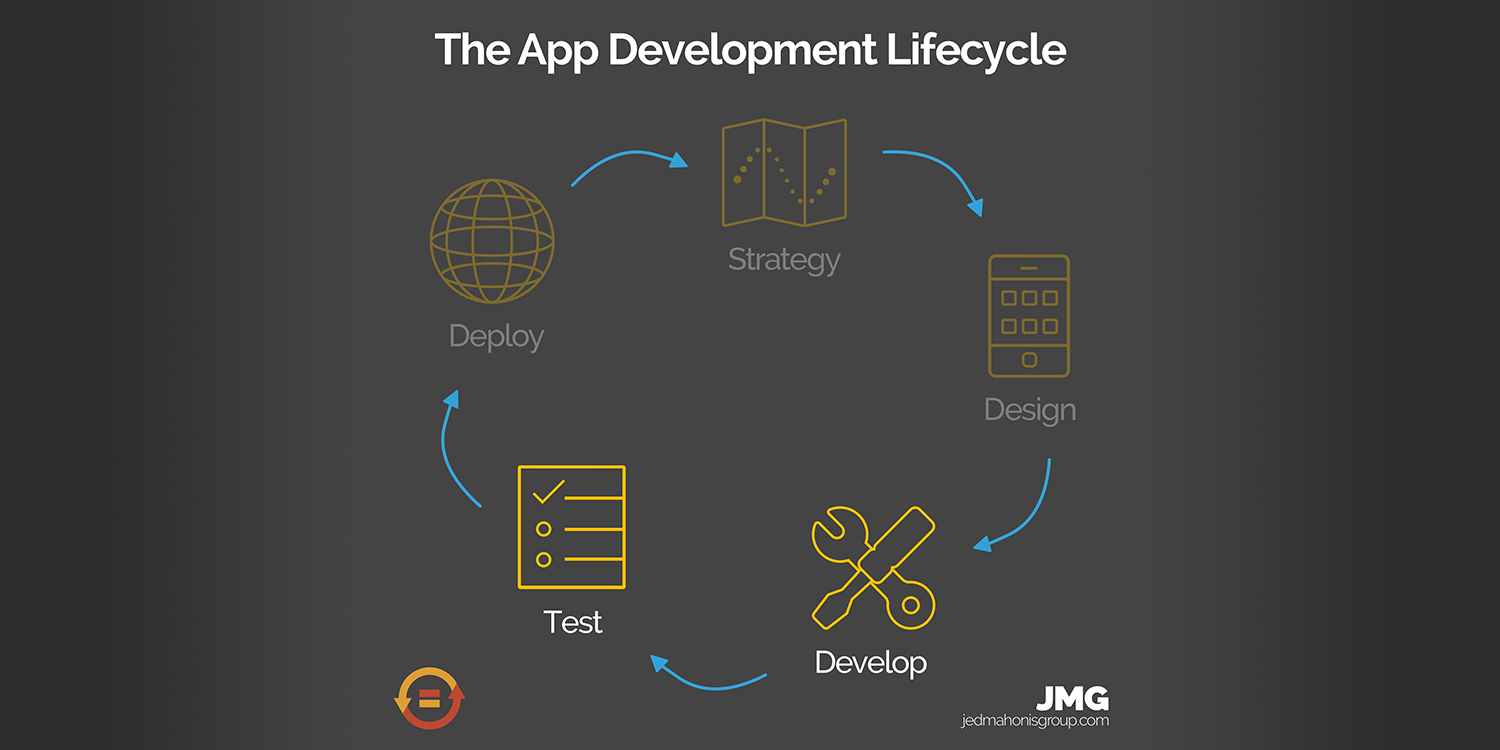

Today we're continuing our miniseries on the app development lifecycle with the third and fourth phases: development and testing. At this point in the app development lifecycle, we've completed our strategy phase, which is where we created the user stories that detail what the app should do. We've also completed our design phase, where we ended up with an asset catalog that tells us how the app should look and function. By the end of the development and testing phases, we should have a fully tested mobile app that's ready to deploy to your users. Before we get started with the development phase, though, if we don't already have our environment set up, we need to take care of that.

So, just some backstory on how our team works. Our typical development team consists of an iOS developer, an Android developer, a server developer, and a front-end web developer, so there are usually somewhere between one and four people working on an app at any given time. Each of those developers has their own responsibilities for their code, so we want to make sure they're all set up for success and that we're all on the same page about how our processes work.

The first thing we do is give our developers the design, which I think makes sense: they've got to know what they're actually building. Next, we give them the user stories in a prioritized order. When we develop the user stories with you during our strategy session, we typically go through the app the way the user would, so the stories are very sequential: how the user creates an account, starts using the app, and so on. But when we develop an app, our teams usually work what we'd call asynchronously. One developer building the iOS side of things might be working on one feature while the server developer is getting another feature ready. That's very common. So we prioritize the tasks for each developer so that nobody is sitting around waiting for someone else's work to get done. There's plenty of work to do, as long as we prioritize the order so that everyone is working at the best pace possible.

Next, we establish the code repository. The code repository is where all of the code lives, and we use software called Git, which basically allows multiple members of a team to make changes to a larger codebase without the risk of overwriting someone else's work. The last thing you'd want is for one Android developer to accidentally overwrite something another Android developer wrote. That would be a total waste of time. That's why we check our code into Git. We host all of our code with a tool called Bitbucket, so at this point we make sure our developers have an empty repository they can start committing their code to. We also take this chance to review our Git etiquette, because there are many different ways teams can set up and use Git. Especially if we need to bring a contractor on board, we want to make sure everyone on our team understands our processes so that everyone can move efficiently. Typically that's not a problem, but we like to be very clear up front so there are no questions or confusion down the road.

Next, we set up our Slack team. Slack is how we communicate with each other. If you haven't used it before, it's basically a chat-room-style texting app, but with a lot more tools in it. From this point on, we really only use email when we need more of a paper trail: things like approving a release or okaying a new user story being added. Now, sometimes our clients don't care to be involved with the team at the level of seeing the nerdy back-and-forth conversations among our team. If that's the case, we just create our own private channel and get started. Otherwise, what usually works better for us is to create a brand-new team and invite the client onto it. That way our project manager and our client can talk directly through Slack, our team can talk as well, and our project manager keeps everything moving between both parties. That's really why we prefer Slack over email: everyone can see what's going on and know where the project is at any given point.

After we've set up our Slack team, the last thing we do is set up Trello. User stories are written in a Google Sheet, and they're meant to track the big picture of where everything is going. But anyone who's ever managed a large project knows that there are the big goals, and what makes up the big goals is a bunch of smaller goals. We've found that Google Sheets works really well for tracking the big things, but for the smaller tasks that keep things moving along in the right order, Trello is a much better tool. Things like bug reports, administrative tasks (like someone needing to renew a certificate), and upcoming features we might want to add in a new release all get stored in Trello. We invite our client to their project's Trello board; each of our projects has its own private Trello board that only the team members and the client can view. Between the Trello board for the small tasks and the Google Sheet for the large ones, we can all make sure we know where the project is at any given point.

Now that our developers' environment is set up and our team is all on the same page, we can actually start writing code. This process is another loop in and of itself, so bear with me while I go through the whole loop, and we'll take it as we go. First, a developer takes a user story and starts writing code. Once they've completed the code for that user story, they commit it to Git. At that point, our QA (quality assurance) team gets notified, and they can run the code and make sure the committed code meets the user story. Like I said, I'll get into what they're actually testing a little later, when we get to the testing phase. If they find any issues, they create a card in Trello and give the developer useful feedback, which, again, we'll talk about later. As soon as QA gives it the thumbs up and says, yep, the app does exactly what we expect, the project manager reviews the code and then demos the app for our client. Usually at this point our client has feedback as well, and we want to make sure it's incorporated, so we go back and incorporate that feedback. Once all of the feedback is completed and tested and everyone gives it the thumbs up, we mark that story complete and move on to the next one. As I mentioned, all of this happens in a loop: take a user story, write some code, test it, get it approved, and move on to the next thing. It's not easy to do, but conceptually it's pretty straightforward. And once we're in this loop, while a developer is waiting for feedback from QA, the project manager, and the client, they don't stop and wait; they usually just pick up the next user story and keep moving.

So at any given point, once a developer really gets going on a project, they could be balancing dozens of stories in their head. A new project typically has dozens, if not hundreds, of user stories, and our team is constantly working together to complete the bigger stories, with everyone handling the small tasks that make up each big story. That's why the prioritized list of user stories we talked about is so critical to nail at the beginning.

Once our developers have finished all of the user stories and we're done with the development phase, we move into the next phase, which is testing. First, a quick note on testing: apps are never bug-free. I think a lot of times people come to us expecting that every app they use is crash-free and works perfectly. And while things might work perfectly in your particular use case, there's always something that can happen. Users always find a way to use an app in an unexpected way, which leads the app to do unexpected things, and that's pretty much the definition of a bug. It might not even be something the user is intentionally doing to break the app. The user's battery could die while they're using your app, or their network connection could drop. Something external could happen, too, like Apple or Google updating their operating system in a way that breaks something we've built. So when we're testing an app, we want to make sure that the vast majority of your users are going to be thrilled with it. Once it's out there and we can actually see crash reports, we can fix any issues your users run into as we go. But through our testing, we can at least nail down the vast majority of issues before the app gets out there. We just want to set the expectation that no app is going to be absolutely perfect and flawless; I think it's dangerous to expect otherwise. So we're very clear up front that what we're trying to do is make sure the app works perfectly for as many users as we possibly can.

So what does QA actually do? How do we actually test an app? Our testers are looking for two big things: they're making sure the user stories are met, and they're doing whatever they can to break the app. Let's go over those individually. First, meeting the user stories. If you've been listening to our app development lifecycle series from the beginning, you've probably gathered that we really try to keep the user stories in mind. They're our top priority, and that's critical, because we want to make sure that what we're building meets what you're expecting. If we have it all written out in plain, clear English that anyone can pick up, read, and understand, we can all be very clear on what needs to be delivered. When our designers, developers, testers, and project manager are all looking at these user stories, we have several sets of eyes making sure we're delivering exactly the kind of app you want to build and, more importantly, the kind of app your users are going to want to use.

Second, and this is probably one of the more fun parts of testing, we do what we can to break the app. Now, we could test all day until the cows come home, but budgets are obviously a concern, and we don't want to keep testing everything all the time. So when we try to break the app, what we're really looking for are the common, major things people do that break apps.

Here are some of the common ones. Basically, any place a user provides input into the app is ripe for breaking things, and as you can probably imagine, we've seen it all. Putting emojis in passwords and usernames caused a whole ton of problems as soon as emojis became widespread here in the US. Then there are people whose default language uses a non-Latin script, like Russian with its Cyrillic alphabet, or Arabic, instead of English. And people put crazy, unexpectedly long things into text fields. For example, with a zip code field you're expecting five digits, and someone pastes in the entire text of the Odyssey. That can break something, and people do it. People put all sorts of things into apps.
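To make that a bit more concrete, here's a rough sketch of how a tester might throw those kinds of inputs at a single field. It's written in Python purely for illustration: `is_valid_zip`, `EDGE_CASES`, and `run_edge_cases` are hypothetical names made up for this example, and it assumes a field that should accept exactly five ASCII digits. It isn't code from any real app.

```python
# Hypothetical validator for a zip code field that expects exactly five digits.
ASCII_DIGITS = set("0123456789")


def is_valid_zip(text: str) -> bool:
    # str.isdigit() would also accept non-ASCII digits such as Arabic-Indic
    # numerals, so compare against the ten ASCII digits explicitly.
    return len(text) == 5 and all(ch in ASCII_DIGITS for ch in text)


# The kinds of inputs a tester might throw at the field.
EDGE_CASES = {
    "normal": "12345",
    "empty": "",
    "emoji": "1234\U0001F600",                        # four digits plus an emoji: still length 5
    "cyrillic": "\u043f\u044f\u0442\u044c",           # the Russian word for "five"
    "arabic_indic_digits": "\u0661\u0662\u0663\u0664\u0665",
    "way_too_long": "9" * 100_000,                    # stand-in for pasting a whole book
    "zip_plus_four": "12345-6789",
    "padded_with_spaces": " 12345 ",
}


def run_edge_cases() -> dict:
    """Feed every edge case to the validator and record the outcome, including crashes."""
    results = {}
    for name, value in EDGE_CASES.items():
        try:
            results[name] = is_valid_zip(value)
        except Exception as exc:  # a crash is itself a bug worth reporting
            results[name] = f"crashed: {exc!r}"
    return results


if __name__ == "__main__":
    for name, outcome in run_edge_cases().items():
        print(f"{name:>20}: {outcome}")
```

Note that the emoji case is exactly five characters long, so a check on length alone would let it through. The ZIP+4 and padded inputs are rejected here too; whether they should be is the kind of question that goes back to the user stories.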

Another kind of thing is large data sets. Let's say, for example, you're building an email app. I'm still fascinated when I'm testing things on other people's devices and I see the little red badge on their email app showing hundreds of thousands of unread messages. People are going to push your app to the absolute limits, and testing for that is what we'd call edge case testing: we're testing the absolute bounds of what you can expect in the app. Even something like the number of friends, if you have a social app: what happens if somebody friends every single person on your platform? How will that look? What happens if they have zero friends? How does that look? Those are the kinds of things we test with edge case testing, and really, they're the kinds of things we test for anywhere a user provides input.

Another thing we check for is bad actors. Anywhere you can provide input into an app, people are also going to try to find ways to maliciously break it. People will do things like SQL injection (you can look that up if you're interested), flood your app with multiple posts a second, or upload non-images or viruses anywhere you allow a user to upload an image. We check for those kinds of things as well, to make sure that what people upload to your system is appropriate: maybe not appropriate in an ethical sense, but appropriate in the sense of not actually destroying anything on your system.
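For anyone who does want to look up SQL injection, here's a minimal illustration using Python's built-in `sqlite3` module. The in-memory `users` table, its columns, and the two lookup functions are all assumptions invented for this example, not how any particular app stores its data. The `attack` string is the sort of thing a tester might type into a login field: pasted straight into the SQL text, it rewrites the query; passed through a `?` placeholder, it's treated as plain data.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    # In-memory database with a hypothetical users table.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # UNSAFE: user input is pasted directly into the SQL text.
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # SAFER: the ? placeholder makes sqlite3 bind the input as a value, not as SQL.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    attack = "nobody' OR '1'='1"
    print("unsafe:", find_user_unsafe(conn, attack))  # leaks every row
    print("safe:  ", find_user_safe(conn, attack))    # matches nothing
```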

And finally, we check for things related to accessibility. A lot of times people will use the app, not maliciously trying to break things, but simply enabling different features the operating system provides because of their particular needs. For example, if a user has Arabic enabled as their language and everything gets flipped to read right to left, how does your app handle that? Screen readers: if somebody is blind and needs to use VoiceOver to navigate through your app, how does it work? What about inverting colors or making the font super large? These are the kinds of things we want to check to make sure your users' experience doesn't break when they enable these features.

Those are the things our QA team is looking for as they test your app. Just like in the constructive feedback part of the last episode of this series, our developers need quality feedback in order to fix problems, and we really strive to be as clear and concise as possible about what the problem is and how it needs to be fixed. One of the most common things that happens with a first-time client is that we'll give them a build and say, go ahead and play with the app, and they'll come back and say, "This doesn't work." That is the most unhelpful piece of feedback we can get. What doesn't work? Why doesn't it work? What were you expecting it to do? What did it actually do? What steps did you take to get there? Those are the things we actually need from you in order to fix any problems that come up or any places where we're not meeting your user stories.

So here's what we're looking for during the testing phase when we ask for constructive feedback. First, what user story are you testing? Second, what steps did you take to get to the problem? Ideally, we'd like reproducible steps. So if you have an issue with your sign-in form, you'd say: I launched the app while I was not signed in, I went to the sign-in button and tapped it, and nothing happened. Those are reproducible steps.

Third, what should have happened? Well, if I tap that sign-in button, I should have been signed in. That's what we'd expect to happen. Fourth, what happened instead? I tapped the button and nothing happened. And fifth, any images, or preferably a video, of you performing that action and running into the issue. Having those kinds of things in the hands of our developers gets problems fixed a lot faster. And you telling us what should have happened instead really makes sure we're hitting the mark and delivering the kind of app you're expecting. All of the steps we just walked through are what our testers are doing during the development phase as well: making sure the app meets the user stories and performs every feature it should, and making sure it can gracefully handle anything unexpected you throw at it.
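If it helps to see those five pieces side by side, here's a small sketch of a bug report template. It's a hypothetical Python data class (`BugReport` and `to_card_text` are invented for this example), not a Trello feature or anything from our actual tooling. It assumes the screenshots or video are referenced by file name, and it refuses to format a report that skips the story, the steps, the expected result, or the actual result.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BugReport:
    user_story: str          # 1. Which user story were you testing?
    steps: List[str]         # 2. Reproducible steps to get to the problem
    expected: str            # 3. What should have happened?
    actual: str              # 4. What happened instead?
    attachments: List[str] = field(default_factory=list)  # 5. Screenshots or video

    def to_card_text(self) -> str:
        """Format the report as plain text, rejecting vague reports like "this doesn't work"."""
        missing = [name for name in ("user_story", "expected", "actual")
                   if not getattr(self, name).strip()]
        if not self.steps:
            missing.append("steps")
        if missing:
            raise ValueError(f"Bug report is missing: {', '.join(missing)}")
        lines = [f"User story: {self.user_story}", "Steps to reproduce:"]
        lines += [f"  {i}. {step}" for i, step in enumerate(self.steps, start=1)]
        lines += [f"Expected: {self.expected}", f"Actual: {self.actual}"]
        if self.attachments:
            lines.append("Attachments: " + ", ".join(self.attachments))
        return "\n".join(lines)


if __name__ == "__main__":
    # The sign-in example from above, written up as a report.
    report = BugReport(
        user_story="As a returning user, I can sign in",
        steps=["Launch the app while signed out", "Tap the sign-in button"],
        expected="I am signed in",
        actual="Nothing happens",
        attachments=["sign-in-tap.mov"],  # hypothetical screen recording
    )
    print(report.to_card_text())
```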

Once we've completed all of the user stories, we're out of the development phase and into the testing phase, and we do what we call a regression test. We start with user story number one and go all the way to the end. The reason is that, during the development phase, things are happening asynchronously: people are working on different tasks at different times. If you're working on a project for several months, something built at the beginning of the project might not behave exactly as you expected by the end. So now that we have what we'd call a completed app, we go through it story by story, testing each one and making sure everything still works as expected. It takes a long time, but by going through it, we make sure no stone is left unturned and every corner of the app is tested.

After the app passes our internal regression testing, we do one final demo for you, our client. This is where we again go through and show you every single user story to make sure you agree that we've knocked it out of the park. Once you've given us your final written approval of the app, we're done with the development and testing phases.

So coming out of the development phase, we've built an app that fulfills every single user story. And coming out of the testing phase, we've made sure the app has been tested top to bottom and is ready for deployment, which is the subject of our next episode.

I would love to hear your thoughts on this miniseries. If you have any questions or comments, please do reach out to me. You can get in touch with us by emailing Hello@constantvariables.co, or you can find us on Twitter. I'm @TimBornholdt and the show is @CV_podcast. Show notes for this episode can be found at constantvariables.co. Today's episode was edited by the nonpareil Jordan Daoust.

This episode was brought to you by The Jed Mahonis Group, who builds mobile software solutions for the on-demand economy. Learn more at JMG.mn.